Tokenization In NLP / PSQL / Merging data

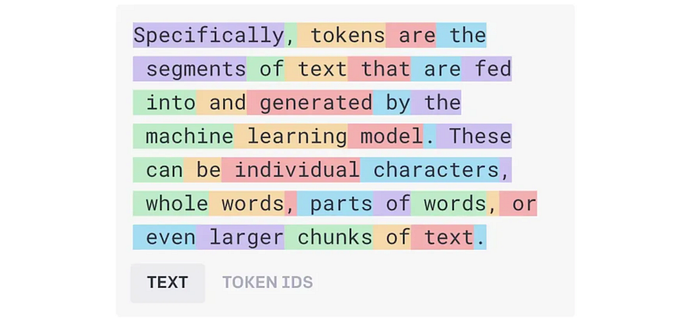

Initializes a vocabulary list based on every character

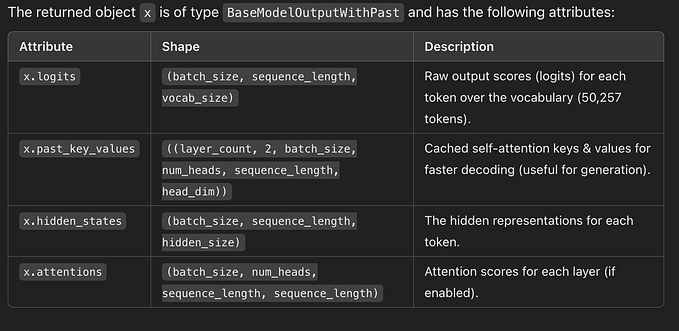

Each element in the vocabulary list is called a token

Appends the vocabulary list based on an algorithm until we reach a desired vocabulary size

The algorithm is recursive

First iteration merges the individual characters into pairs, and these are added as tokens into the vocabulary list

Susequent iterations merge tokens

# Byte-Pair Tokenization

Merge Rule :

Merge tokens with highest frequency of adjacent occurence

# Word Piece Tokenization

Merge Rule :

#1) Merge tokens with the highest probability of occurence together

#2) Normalize by the probability of ocurrence of token 1 and probability of occurence of token 2

# Sentence Piece Tokenization

- Treats spaces as characters

- Can be applied to languages that don’t use spaces to divide words (Chinese, Japanese).

- Note that sometimes Sanskrit merges words and there are rules to merge.

Paper on tokenization https://aclanthology.org/2021.emnlp-main.160.pdf

- Uses greedy longest-match-first strategy

Leet-code level concepts:

Split by word and space, aka “pre-tokenize”. Only used in BytePairEncoding (BPE) and WordPiece.

Substrings

Regex matching

Parallel subword tokenization schemes:

- Byte-Pair Encoding (BPE) (Schuster and Nakajima, 2012; Sennrich et al., 2016)

- SentencePiece (Kudo, 2018)

- WordPiece (Google, 2018)

5 min Video on tokenization

Implementation for sentence transformers: https://sbert.net/

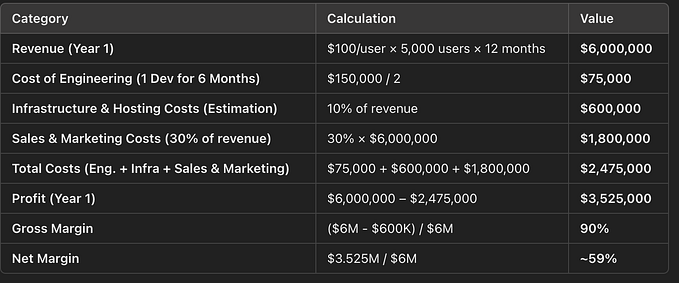

Use tokenization and embedding models to merge databases.

Join tables on column names with similar words (when value is not exactly the same, as common when different people enter data or different datasets are merged).

Default structure in postgresql:

Custom tables:

Select rows based on a condition: Select all columns from table rivers where pollution is unknown.

Link for benchmarking performance in psql db: https://www.postgresql.org/docs/current/pgbench.html