Sequence of papers that train or optimize models for text data

(not exclusively text data)

triplet loss function

https://arxiv.org/pdf/1810.04805 (s-bert)

use of data preceding and succeeding a location in a sequence to train, i.e. bidirectional training

https://arxiv.org/pdf/1810.04805 (bert)

Positional encoding to parallelize training

https://arxiv.org/pdf/1706.03762 (attention and transformer model)

Layer normalization vs. batch normalization

https://arxiv.org/pdf/1607.06450 (layer normalization)

— X —

SONAR paper: “ran an extensive study on the use of different training objectives, namely translation objective (MT), auto-encoding objective (AE), denoising auto-encoding objective (DAE) and Mean Squared Error loss (MSE) in the sentence embedding space”

- 4 different objective functions, although cross-entropy and NCE not included.

- check with mathematical form to see if one of the other ones is equivalent.

- “auto-encoding affects the organization of the sentence embedding space”

- how do they evaluate the organization of the embedding space?

Concepts from SONAR and text models / entity extraction

- “main difference with classical sequence-to-sequence model is the bottleneck layer, or pooling function, that computes a fixed-size sentence representation between the encoder and the decoder” → embedding models have a fixed size output

Why does it make sense to linearly add objective functions? Is there some scaling factor to ensure the different terms have similar magnitude? What happens when you add terms linearly? Can they cancel out? Are you still capturing the intention of each?

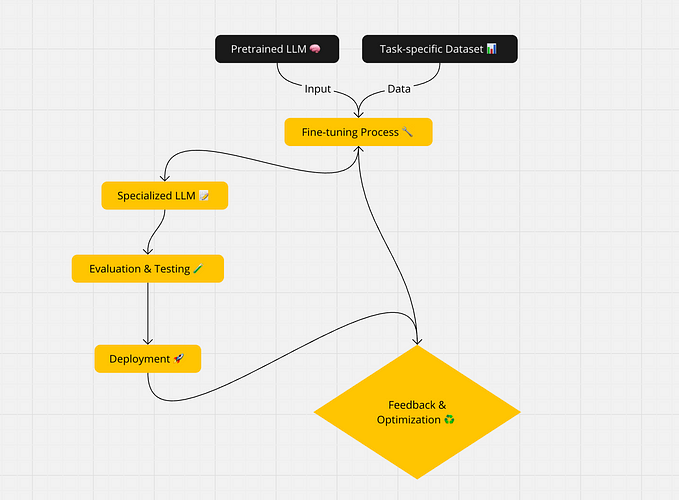

Knowledge distillation, aka teacher-student training technique: from darmstadt, germany , https://arxiv.org/pdf/2004.09813 —

…speaks about using an existing encoding model, to encode new data, data that was not previously in the training distribution. Then, there are some steps to train a model for new data. How is this different from fine-tuning?

How to be quick about learning representations of data that’s not in the training data?

vs. using a large model to make a smaller model and only representing a subset of information [for lang specific, or domain-specific application] (both are useful, although first is more magical?). not going deeper into a domain and thereby extending knowledge. rather limiting to a domain, even if its shallow set of knowledge.

- “[denoising auto-encoding (DAE) + MSE loss improves decoding tasks, but too much of it affects the language-agnostic representations]”

- Granite — coarse-grained igneous rock composed mainly of quartz, feldspar, and mica.

- Dolomite — carbonate mineral and rock composed of calcium magnesium carbonate.

- Calcite — carbonate mineral made of calcium carbonate (CaCO₃).

- Hematite — iron oxide mineral, often with a reddish streak.

- Malachite — green copper carbonate mineral.

- Graphite — carbon used in pencils and as a lubricant.

- Halite — rock salt, composed of sodium chloride.

- Apatite — group of phosphate minerals, often green or yellow.

- Rhyolite — volcanic rock rich in silica, similar to granite.

- Sodalite — blue mineral often used in jewelry.

A vector representing an image and a vector representing piece of text (phrase, para) are solved for together using the contrast loss function. This is similar to training for bi-lingual pairs, or Q/A pairs, or training any other data with each row representing linked pairs, and data in different rows dissimilar to each other.

CLIP https://arxiv.org/pdf/2103.00020

builds on “Contrastive Learning of Medical Visual Representations from Paired Images and Text” https://arxiv.org/pdf/2010.00747

which uses

bidirectional contrastive objective

cross-modality NCE objectives

In the Gloria 2021 paper, also for medical images and text mapped to similar semantic space, there is a global contrastive loss and a local contrastive loss.

for

“three chest X-ray applications (i.e., classification, retrieval, and image regeneration)”

how: by fitting

a parameterized image encoder function fv

for their choice of a fitting function, they choose a CNN (convolution neural network), reasonable as the dataset consists of images.

more specifically: ResNet50

binary cross-entropy loss

- Adam optimizer (Kingma and Ba, 2015)

- initial learning rate of 1e-4

- weight decay of 1e-6

- batch size of 32

- validation loss every 5000 steps

Pre-processing image data:

- zero-pad the input image to be square, and then resize it to be 224×224

Also on images and text:

https://openaccess.thecvf.com/content/ICCV2021/papers/Huang_GLoRIA_A_Multimodal_Global-Local_Representation_Learning_Framework_for_Label-Efficient_Medical_ICCV_2021_paper.pdf — introductory material in this paper repeats from the ConVirt paper. Unique contribution is segmenting images to look at sub-sections where the feature of interest might be, they call it global vs. local.

- type errors (mostly taken care of by tokenization / embeddings models, je pense)

- long-range context dependency

so they propose

- “token aggregation”

- a self-attention (probability) model variation. The scale/volume of the context they are considering is multi-sentence reasoning.

Also use ResNet50 for extracting image features. It’s not necessarily explained why ResNet50.

- the final layer of ResNet50 is an adaptive pooling layer

- an intermediate layer is a convolution layer

Data access is free, requires 1 week of training + agreement. https://physionet.org/content/mimiciii/1.4/

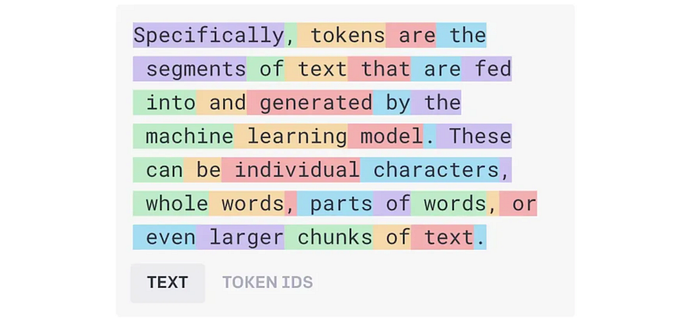

Image encoding and Text encoding (including tokenization strategy) are explained.